I am no expert in gender bias or gender discrimination, but it is not a secret that the system security community is male-dominated and does not have a good reputation for being a friendly environment for women.

There are tons of great initiatives and groups supporting women in cybersecurity, and many people more knowledgeable than me on the topic. But the Security Circus contains a lot of data, so I thought I could use it to provide some actual measurement of the extent of the problem in our community.

Let’s go.

Base Rate for Comparison

Let’s start by getting some general figures to put our results into context.

Already at the bachelor level, computer science is doing particularly badly. Pretty much everywhere in the world the percentage of women is below 20%, considerably less than in other STEM fields. For instance, the average for CS in Europe is 19.8%, vs 26.7% in Engineering and 54.8% in Natural Sciences and Mathematics (source).

However, we can assume most researchers who publish in the Top4 have a Ph.D., or are in the process of getting one. Here I could not find figures worldwide, but in the US, roughly 20% of the Ph.D. degrees in Computer Science are awarded to women, and this has been more or less stable over the past 20 years (source).

A study published in 2020 in Communications of the ACM ("A Bibliometric Approach for Detecting the Gender Gap in Computer Science", Mattauch et al.) measured the gender distribution among the authors of 19 CS conferences (the only security one was NDSS) between 2012 and 2017. The study found that only 9.6% of the authors were women. However, this value could be inaccurate, as the study's authors decided to remove all Asian names from their analysis because they were too difficult to classify automatically.

Dataset

For this study, I took all papers published in the Top4 System security conferences (IEEE S&P, Usenix Security, NDSS, ACM CCS) between 2000 and 2020. This includes 4299 papers written by 7524 distinct researchers (if you want to know more about the data, please check the Security Circus page).

I then used Namsor, a popular service that provides APIs to infer the gender of a given name. Since the first name alone is not very indicative, I always considered the firstname-lastname pair. The service returns a likelyGender as well as a probability that indicates the confidence of its classifier. For roughly 40% of the authors, the confidence was above 90%. But for a stunning 12% of the authors the confidence was below 55% (so the algorithm was giving almost 50-50 chances).

As already mentioned by Mattauch et al., the problem mostly affected Asian names, which are often gender-neutral. But since those are a very large part of the dataset, I did not want to simply remove them. Thus, I started a very long and super-tedious process of manual validation of roughly 2K names. The process (not very scientific) was the following. I searched for "name lastname" + security on Google Images. If all results were consistent with the result returned by Namsor, I moved on. Otherwise, if a single researcher profile was returned, I took that as the result. If not, I extracted the most recent affiliation of the researcher from my DB and added that to the query. If more profiles were returned, I tried to briefly check their pages to make a decision.

The process was difficult, as sometimes two different but perfectly reasonable results existed. For instance, Xin Liu (female professor at UC Davis) published a paper at NDSS 08, while the homonym Xin Liu (male Ph.D. student at Univ. of Maryland) co-authored one at CCS 21. It was also error-prone and not always successful, as sometimes the query returned no results I could use to make a decision.

Overall, I manually modified 654 entries, 142 from Male to Female and 512 from Female to Male.

The process took many, many evenings, and I am sure there are still quite a few misclassifications in the system, but overall I am confident I addressed most of the mistakes. Or at least I hope so…
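The triage described above (trust confident answers, review the near coin-flips, then record manual corrections) can be sketched roughly as follows. This is only an illustration: the `Inference` record, its field names, and the sample entries are hypothetical, and it does not call or reproduce the actual Namsor API. Only the 90% and 55% thresholds come from the text.

```python
from collections import Counter
from dataclasses import dataclass

# Thresholds mentioned in the text: above 0.90 is treated as confident,
# below 0.55 is close to a coin flip.
HIGH_CONFIDENCE = 0.90
LOW_CONFIDENCE = 0.55


@dataclass
class Inference:
    """One classifier answer. Field names are hypothetical, not Namsor's schema."""
    full_name: str
    gender: str         # "male" or "female"
    probability: float  # classifier confidence


def triage(inferences):
    """Split classifier answers into high / medium / low confidence buckets."""
    buckets = {"high": [], "medium": [], "low": []}
    for inf in inferences:
        if inf.probability > HIGH_CONFIDENCE:
            buckets["high"].append(inf)
        elif inf.probability < LOW_CONFIDENCE:
            buckets["low"].append(inf)
        else:
            buckets["medium"].append(inf)
    return buckets


def apply_overrides(inferences, manual_labels):
    """Replace automatic labels with manual ones and count flips per direction."""
    final = {}
    flips = Counter()
    for inf in inferences:
        label = manual_labels.get(inf.full_name, inf.gender)
        if label != inf.gender:
            flips[(inf.gender, label)] += 1
        final[inf.full_name] = label
    return final, flips


# Made-up records, for illustration only.
sample = [
    Inference("Alice Example", "female", 0.97),
    Inference("Sam Placeholder", "female", 0.52),
    Inference("Robin Sample", "male", 0.71),
]
buckets = triage(sample)
final, flips = apply_overrides(sample, {"Sam Placeholder": "male"})
print(len(buckets["low"]), dict(flips))
```

Note that this sketch keys the overrides by full name, which would break on homonyms such as the two Xin Liu researchers mentioned above. A real pipeline would need a per-author identifier instead.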

Results

Overall, women accounted for only 13.8% (1036 out of 7524) of all researchers in the dataset.

Out of 4299 publications, 1502 (34.9%) had a woman as co-author. But only 23 papers over 21 years had only women authors!!
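For readers who want to reproduce this kind of summary on their own data, here is a minimal sketch. The first part simply re-derives the two percentages from the counts reported above. The second part computes the same three quantities on a tiny made-up set of papers; the `papers` and `gender` structures are hypothetical and are not the Security Circus schema.

```python
def percentage(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Counts reported in the text.
women_share = percentage(1036, 7524)   # women among distinct researchers
mixed_share = percentage(1502, 4299)   # papers with at least one woman


def summarize(papers, gender):
    """papers: paper id -> list of author ids; gender: author id -> label."""
    authors = {a for names in papers.values() for a in names}
    women = {a for a in authors if gender[a] == "female"}
    with_woman = [p for p, names in papers.items()
                  if any(a in women for a in names)]
    only_women = [p for p, names in papers.items()
                  if all(a in women for a in names)]
    return {
        "authors": len(authors),
        "women": len(women),
        "papers_with_woman": len(with_woman),
        "papers_only_women": len(only_women),
    }


# Made-up toy data, for illustration only.
papers = {"paper-a": ["r1", "r2"], "paper-b": ["r2", "r3"], "paper-c": ["r4"]}
gender = {"r1": "female", "r2": "male", "r3": "male", "r4": "female"}
stats = summarize(papers, gender)
print(women_share, mixed_share, stats)
```

Counting distinct authors (a set) rather than author slots matters here: a prolific researcher appears on many papers but should be counted once in the first figure.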

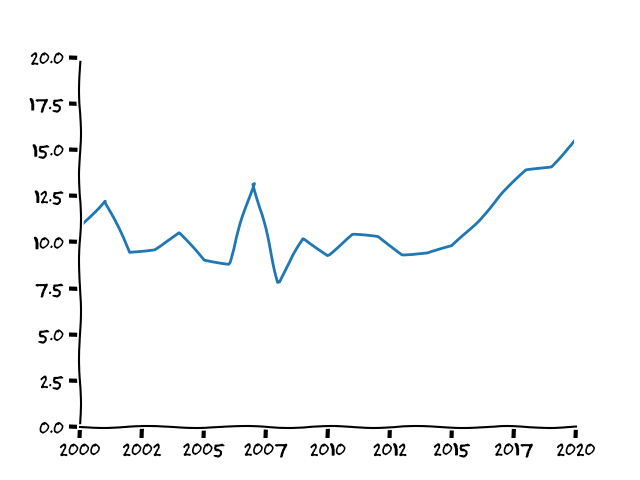

Breakdown Per Year

The yearly trend seems to suggest that after 2017 the number of women in our conferences increased significantly.

I am optimistic and I like to believe this is in fact the case.

But for the more pessimistic among you, another possible explanation is that the number of authors is increasing exponentially over the years, and over the past five years China became the second country by number of papers (it was almost negligible until 2010). Since most of the errors in the gender classification are on Asian names, and predominantly males classified as females, small errors in the data could inflate the percentage of women over the past 3-4 years. Just saying.
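To get a feeling for how much a one-sided classification error could matter, here is a small worked example. All numbers in it (1000 authors, 12% women, a 3% male-to-female error rate) are invented for illustration and are not estimates from the dataset. The only thing taken from the text is the direction of the bias: manual corrections were mostly from Female to Male (512 vs 142).

```python
def measured_share(true_women, true_men, male_to_female, female_to_male):
    """Share of women a classifier would report, given its two error rates.

    male_to_female: fraction of men wrongly labeled as women
    female_to_male: fraction of women wrongly labeled as men
    """
    labeled_women = (true_women * (1 - female_to_male)
                     + true_men * male_to_female)
    return labeled_women / (true_women + true_men)


# Hypothetical population: 1000 authors, 120 of them women (12%).
true_share = measured_share(120, 880, 0.0, 0.0)

# Same population, but 3% of the men are mislabeled as women.
biased_share = measured_share(120, 880, 0.03, 0.0)

print(f"true: {true_share:.1%}, measured: {biased_share:.1%}")
```

Because men are the large majority, even a small male-to-female error rate adds a noticeable number of false positives to a small group, so the measured percentage moves by a couple of points in this toy scenario.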

Breakdown Per Conference

| Conference | Women |
|---|---|
| IEEE S&P | 13.3% |
| Usenix Security | 12.3% |
| NDSS | 12.8% |
| ACM CCS | 13.1% |

While I was not expecting to find much here, there is a slightly higher percentage of women in conferences that focus less on system topics and more on other security topics, such as applied crypto.

But I don’t know: the difference is small and it could just be a random fluctuation.
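One rough way to check whether a gap of this size could be noise is a two-proportion z-test. The sketch below uses only the Python standard library. The per-conference author counts are not given in this post, so the sample sizes (3000 authors per venue) are purely hypothetical, chosen just to show the mechanics on the 13.3% vs 12.3% gap.

```python
import math


def two_proportion_z_test(women_a, total_a, women_b, total_b):
    """Two-sided z-test for the difference between two proportions."""
    p_a = women_a / total_a
    p_b = women_b / total_b
    # Pooled proportion under the null hypothesis of equal shares.
    pooled = (women_a + women_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_a - p_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 13.3% and 12.3% of 3000 authors each.
z, p_value = two_proportion_z_test(399, 3000, 369, 3000)
print(f"z = {z:.2f}, p = {p_value:.2f}")
```

With these invented sample sizes the difference would not be significant at the usual 5% level. Keep in mind that many researchers publish at several of these venues, so the groups are not independent and the test is only a rough sanity check.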

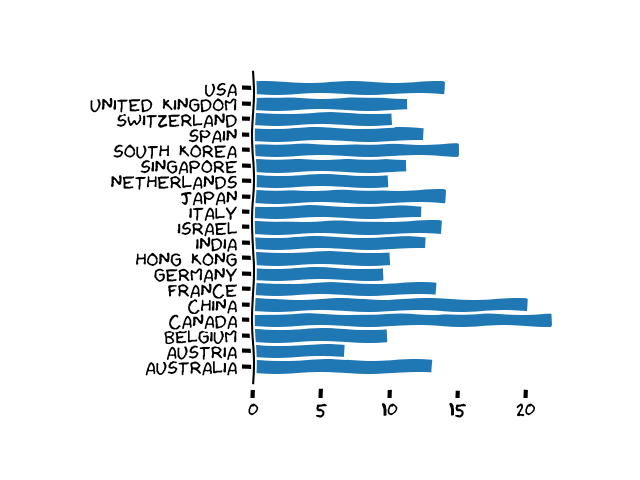

Breakdown per Country of Affiliation

This shows the percentage of women grouped by country (of affiliation, not origin) for those countries with > 50 papers:

Numbers here vary quite a lot, ranging from 6.7% in Austria to 21.9% of women in Canadian institutions.

Why? I really wish I knew…

Breakdown per Number of Papers

Finally, I divided the data according to the number of papers published by each researcher in the Top4 and re-computed the percentage of women in each group:

| Papers published | Women |
|---|---|
| 10+ papers | 6.5% |
| 5-9 papers | 11.8% |
| 2-4 papers | 12.4% |
| 1 paper | 15.1% |

The data shows that the lack of women is much more serious at the top of the scale, where we find mostly professors who lead large groups, than at the bottom, where we find mainly young students!!
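The grouping by number of papers can be sketched as follows. The bucket boundaries are the ones used in the table above. The input format (a list of `(paper_count, gender)` pairs) and the sample records are made up for illustration.

```python
from collections import defaultdict

# (lower bound, upper bound or None, label), same buckets as the table.
BUCKETS = [
    (10, None, "10+ papers"),
    (5, 9, "5-9 papers"),
    (2, 4, "2-4 papers"),
    (1, 1, "1 paper"),
]


def bucket_for(paper_count):
    """Return the label of the bucket a researcher falls into."""
    for low, high, label in BUCKETS:
        if paper_count >= low and (high is None or paper_count <= high):
            return label
    raise ValueError(f"invalid paper count: {paper_count}")


def women_share_by_bucket(authors):
    """authors: iterable of (paper_count, gender). Returns label -> percent."""
    totals = defaultdict(int)
    women = defaultdict(int)
    for paper_count, gender in authors:
        label = bucket_for(paper_count)
        totals[label] += 1
        if gender == "female":
            women[label] += 1
    return {label: round(100 * women[label] / totals[label], 1)
            for label in totals}


# Made-up records, for illustration only.
toy_authors = [
    (12, "male"), (11, "male"),
    (6, "female"), (5, "male"),
    (3, "male"), (2, "male"),
    (1, "female"), (1, "male"),
]
shares = women_share_by_bucket(toy_authors)
print(shares)
```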